Introduction

Choosing the appropriate evaluation method is a prerequisite for credible, independent and useful evaluations in the United Nations and beyond.

On 8 September, at the 2021 European Evaluation Society (EES) Conference, the UN Evaluation Group presented the latest guidance document on methods prepared by its Working Group on Evaluation Methods: the Compendium of Evaluation Methods Reviewed (http://www.uneval.org/document/detail/2939).

The document presents the highlights of a year’s work by the Group, carried out through a series of monthly seminars. It provides a summary of seven main evaluation methods as applied to concrete UN interventions across the full range of the organization’s activities.

The methods reviewed were the following:

- Methods Supporting Evaluation Design: Evaluability Assessment, Theory of Change and Storyline Approaches

- Synthesis and Meta-Analysis

- Contribution Analysis

- Qualitative Comparative Analysis

- Randomised Controlled Trials and Quasi-Experimental Designs

- Outcome Harvesting/Outcome Evidencing

- Culturally Responsive Evaluation

In addition to showcasing how specific evaluations were designed in response to the challenges they encountered, the Compendium highlights the conceptual basis of each method and how the methods differ from one another. The intention of the Group’s work, and of this publication, is to increase participants’ knowledge of evaluation methods in a way that others can easily replicate.

The Compendium was conceived as the first in a series of guidance documents, and it will be updated and built upon as the Group’s work continues.

The session at the EES Conference was run under Theme 2 (Adapting the toolbox: methodological challenges) and was attended by 64 participants. The presenters selected two of the methods highlighted in the Compendium, contribution analysis and evaluation synthesis, for a deep dive into their application in the UN system.

Contribution analysis

Initially developed by John Mayne, contribution analysis (CA) is an approach for assessing causal questions and inferring causality in real-life programme evaluations.[1] It offers a step-by-step process designed to help policymakers reach conclusions about the contribution their programme has made (or is currently making) to development outcomes, alongside the contributions of others.

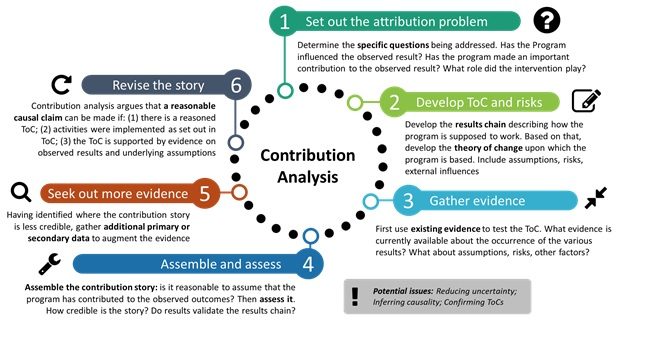

Following Mayne’s approach, and as set out in the Figure below, six steps are followed to design and conduct a credible evaluation using contribution analysis. These steps are often part of an iterative approach to building the argument around an intervention’s contribution (or not) to a broader outcome alongside the contribution of others, and exploring how and why such a contribution was made.

Source: Authors’ elaboration from Mayne (2008) and Betterevaluation.org

The mid-term evaluation of UNCDF’s Mobile Money for the Poor (MM4P) programme[2] demonstrated that if one can (i) confirm a theory of change with empirical evidence (that is, verify that the steps and assumptions in the intervention’s theory of change were realized in practice) and (ii) account for other major influencing factors, then it is reasonable to conclude that the intervention made a difference, i.e. that it was a contributory cause of the outcome. In this case, the outcome was better-functioning (digital) inclusive finance systems for the poor in the Least Developed Countries (LDCs).

The validated theory of change then provides the framework for a plausible argument that the programme’s range of interventions is making a difference. The evaluation questions, together with the lines of evidence generated by the different evaluation tools and techniques, help build the contribution story.

Advantages

Session participants noted that CA is designed to reduce uncertainty about the contribution an intervention is making to observed results. It does so by improving understanding of why those results have (or have not) occurred, and of the roles played by the intervention and by other internal and external factors. Participants also noted that CA can encourage more rigorous monitoring and reporting of results during programme implementation, particularly where multiple drivers of change may be at work in complex social and economic systems. This, in turn, can help programme specialists report the results of their work more accurately while implementation is under way.

Disadvantages

While contribution analysis helps confirm or revise a theory of change, it is not intended to uncover and set out a previously implicit or unstated theory of change. The report from a CA is not definitive proof. Rather, it provides evidence and a line of reasoning from which one can draw a plausible conclusion that, within some level of confidence, the programme has made an important contribution to the documented results.

Based on its experience, UNCDF noted that challenges, such as the availability of appropriate, pre-existing secondary data, may arise when using CA for:

- Reducing uncertainty about the contribution the intervention is making to the observed results.

- Inferring causality in real-life programme evaluations.

- Confirming or revising a programme’s theory of change – including its logic model.

In addition, CA is “agnostic” about which evidence matters, since its key feature is testing alternative explanations. If alternative explanations are not tested, CA can become prone to confirmation bias, falling into the typical theory-based evaluation problem of collecting only the evidence that corroborates one’s prior ideas. Evidence therefore needs to be collected, through multiple lines of evidence, for both the main contribution claim and the alternative explanations, which can increase the cost and duration of the evaluation.

Evaluation synthesis

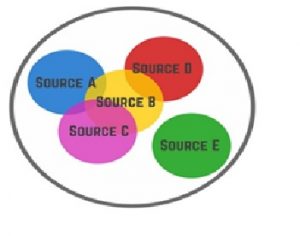

Evaluation syntheses combine existing evaluation knowledge and findings on a topic into new knowledge products. This supports the dissemination of evaluation results and helps identify areas of concern for new evaluations. Conducted through a well-structured process, syntheses address the challenge of “information overload” by delivering products that distil the evidence relevant to decision-making.

Using the example of UNDP Reflections[3], colleagues from UNDP walked participants through the methodological steps of producing lessons from a rapid evidence assessment. The example of “Lessons learned from UNDP support to digitalization” was used to discuss some of the pitfalls and challenges of formulating ‘good practices’ and ‘lessons’, as opposed to evaluation findings.

In the discussion, participants identified some of the advantages and disadvantages of evidence synthesis and shared practical examples.

Advantages

- Saves direct and indirect costs, for both the evaluator and the groups being evaluated.

- Focuses on analyzing structural or common factors of success and failure.

- Increases the perceived robustness of the results, as it builds on previous findings rather than starting from scratch.

Disadvantages

- Restricted access to, or limited availability of, suitable evaluations (i.e. those that assessed the same subject, in similar contexts and with the same objectives) may affect the feasibility or quality of a synthesis.

- The resources and time available for synthesis work may be limited.

- Systematic review methods, such as comprehensive search methods and meta-analysis, can be complex.

Suitability

This method has several possible applications.

- Can be applied to inform the design of a new evaluation: A synthesis of previous evaluations’ findings on the theme and/or geographic region subject to evaluation can inform discussions on the objectives and scope of a new evaluation.

- Can supplement the evidence gathered by an evaluation: A synthesis of previous evaluations’ findings on the theme and/or geographic region subject to evaluation can be used for triangulation and/or to fill gaps in the evaluative evidence.

- Can avoid the need for a new evaluation: When sufficient evidence exists, a synthesis can satisfy the information needs of decision-makers without additional primary data collection.

The method should not be used in the following cases:

- When there is not enough evidence available for synthesis.

- When users expect primary data to be the main source of evaluative evidence.

Conclusions

The presentation was well received by participants, who reported greater familiarity with contribution analysis than with evaluation synthesis: 41 of 54 poll respondents (about 76%) said they were very or somewhat familiar with contribution analysis, compared with 36 of 51 (about 71%) for evaluation synthesis. There was a lively discussion, and examples were provided of how evaluation units in different UN agencies use these methods. Participants also discussed how some bilateral agencies (such as the Swedish International Development Cooperation Agency) and large non-governmental organizations (such as Médecins Sans Frontières / Doctors Without Borders) can apply them in their work.

[1] http://www.uneval.org/document/detail/2939 (page 14)

[2] https://erc.undp.org/evaluation/evaluations/detail/10018

[3] http://web.undp.org/evaluation/reflections/index.shtml

Authors

Carlos Tarazona, Senior Evaluation Officer, UN Food and Agriculture Organisation’s Office of Evaluation

Andrew Fyfe, Evaluation Unit Head, UN Capital Development Fund

Tina Tordjman-Nebe, Senior Evaluation Specialist, UN Development Programme’s Independent Office of Evaluation