Transformation is hard but evaluation can help; how evaluators can contribute to the development of a multi-agency advisory service (MAAS) to support innovators with the development of AI and data-driven solutions for health and social care

By Tom Ling, EES President-elect and Head of Evaluation at RAND Europe

When did you last read an evaluation report and think ‘I understand completely what happened, how it could be improved, and what we need to do next’? On the other hand, how often have you read an evaluation of a transformation and been told ‘it’s all very complicated and context-dependent’ and ‘the world is indeed a mysterious place requiring further research’? If your answers are ‘not often’ to the former and ‘often’ to the latter, read on!

At the recent European Evaluation Society (EES) Online Conference, which I co-hosted, one of the big discussion points was how to support and evaluate transformations. ‘Transformations’ may be large or small, but transformation always involves a step-change in the way services and resources are delivered or used. A caterpillar turning into a butterfly is a transformation; a thin caterpillar becoming a fat caterpillar is not. Transformations might involve one or more of the public, private or third sectors but rarely involve just one organisation. They often respond to the dilemmas that governments face when balancing fiscal pressure to spend less against political pressure to deliver more. One perceived way out is ‘transformation’. Much might be expected from transformation, but it comes with no guarantee of success. At the EES online conference, we asked: ‘how can evaluation better support and measure transformation?’

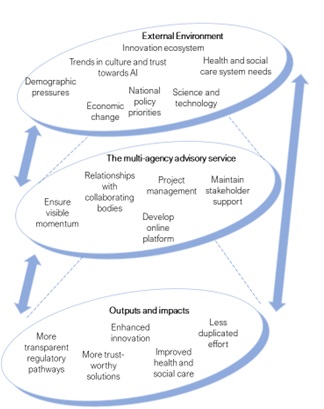

In this blog, we respond to this question by considering an initiative we at RAND Europe are evaluating: the creation and development of a new multi-agency advisory service (MAAS) to support the development and adoption of artificial intelligence (AI) and data-driven technologies in health and care. The long-term aim of the service is to improve quality of care for all by ensuring that the AI and data-driven innovations people access through the health and social care system are both available and meet high safety standards. It will seek to achieve this by providing service users with clarity, transparency and direction on the regulation, evaluation and adoption of AI and some data-driven technologies – making pathways easier to follow and expectations clearer for all. The four national bodies collaborating to develop the MAAS are:

- The National Institute for Health and Care Excellence (NICE), who produce evidence-based guidance and advice for health, public health and social care practitioners.

- The Medicines and Healthcare products Regulatory Agency (MHRA), who regulate medicines and medical devices – including software as a medical device.

- The Health Research Authority (HRA), which protects the rights of patients, including by regulating the use of data collected within health and care for research and product development.

- The Care Quality Commission (CQC), the independent regulator of health and social care in England.

The NHS AI Lab – NHSX is providing funding and oversight. The four partners are developing the service, while key stakeholders include those developing innovations, and health and social care providers. The ultimate beneficiaries of the service are patients and the public.

To be clear, the MAAS is not intended to be a transformation of the whole regulatory system. Instead, it aims to transform the availability and clarity of advice and support so that the system works better for developers and adopters of AI and data-driven technologies. For example, it will not change in any fundamental way the statutory obligations and powers of the four national bodies, but it will (if successful) enable these four partners to work more cohesively, transform how innovators engage with regulation and reduce barriers to innovation in AI and data-driven solutions in health and social care systems. Transforming this part of the regulatory system involves a high level of understanding of existing regulatory requirements, effective collaboration between the four partners, a knowledge of new AI and data-rich technologies, and a technical ability to build a (mostly online) platform for advice and guidance.

Furthermore, in a rapidly changing and uncertain environment, learning and adaptation will be critical for long-term sustainability. Adding to the richness of the evaluation is a recognition that this initiative – the MAAS – is only one of a number of initiatives intended to be mutually reinforcing and supportive for AI developers and adopters. Related initiatives include, for example, improved information governance, ‘digital playbooks’ to help clinical teams understand how to take advantage of digital solutions, other work of the NHS AI Lab and many more efforts. The MAAS is therefore intended to be a transformation within a wider set of changes which will also shape the intended impacts of MAAS – while MAAS has no direct control and only limited influence over these. How these wider changes will unfold – and with what consequences for the MAAS – cannot be predicted with certainty. Instead, we are evaluating a process of learning and adapting through which (it is hoped) the caterpillar might become the butterfly, providing advice and guidance so that the system can be more easily understood and navigated. If successful, this could have a multiplier effect in which the benefits ripple outwards, stimulating more innovation, investment and adoption of AI and data-driven solutions in health and care with wide-ranging benefits for patients and the public.

But more immediately, the evaluators have a role to play in not only documenting progress but also informing that progress through mapping and analysing available evidence from stakeholders (for example through information provided by platform users, surveys, interviews and focus groups). The evaluation should also provide independent judgements about the effectiveness of individual steps being taken along the journey, with a view to assessing their contribution to the transformation ambition. This suggests a more embedded role for the evaluator than might be found in a straightforward summative evaluation of a simpler project.

The RAND Europe evaluation team has only been working with the MAAS team and its stakeholders for a few months. However, we think some lessons have wider implications for our future work and for other evaluations of transformational projects. These are not our evaluation findings but represent initial reflections on our role:

- Involve your evaluators early to build shared knowledge across collaborators

The MAAS team invited tenders for this evaluation early in the service development process. At the start of most transformation initiatives there is an impulse to change direction and an injection of energy. This may, for example, come from senior political concerns, and these in turn often reflect the anxieties of innovators, service deliverers, or worries bubbling up from service users and the public. However, knowledge about what transformation should and could involve is likely to be fragmented and distributed across stakeholders. Really important experiential and technical knowledge and insights might be locked away in different parts of the system such that the system cannot talk to itself. Different stakeholders might understand some aspects of what is possible but very often none of them understands the whole picture. For example, providing a regulatory response to predictive analytics to assess risk and trigger a response requires expertise in AI but also a deep understanding of the settings where it would be delivered, the preferences of service users, the experiences of professionals, and an understanding of wider issues such as the impact algorithms might have on inequalities. Most likely, there is no formal and documented body of knowledge to support the transformation in question.

In our experience from evaluating similar projects, this initial phase can also surface inter-organisational tensions and competing priorities. In the case of MAAS, the four partners have had to develop a shared understanding, going beyond simply agreeing a vision statement. Involving an external evaluator early on can help to map out the necessary causal pathways and draw out the knowledge and expertise of stakeholders along these routes to impact. Most often (as with MAAS) this involves the co-production of a theory of change. This is critical for framing the evaluation, but equally for supporting a shared understanding across stakeholders that underpins effective collaboration. However, it would be naïve to expect that this shared understanding necessarily dissolves differences. In the MAAS, for example, different stakeholders have different views on how best to balance short and longer-term aims, which we are working to resolve.

- Put boundaries round the evaluation and pay attention to what a transformation initiative can hope to influence most

This point was clearly articulated to us in our interviews and workshops with the four MAAS partners who reminded us of the importance of not evaluating the whole system (or especially systems of systems) within which the MAAS operates. Trying to evaluate the whole innovation eco-system, including the absorptive capacity of the health and social care system to use AI and data-intensive solutions, would inevitably exceed the brief of the evaluation and provide too wide a canvas to provide detailed evaluative insight.

Any effort to focus constantly on ‘systems within systems within systems’ could quickly become unmanageable for the evaluation and unhelpful. Instead, and with encouragement from the MAAS partners, we have focused on what the initiative can most influence and what would help them most in order to achieve their aims. The evaluation team can helpfully focus by asking the question ‘what information would most help you to deliver your aims?’

- A focus on learning should be anchored within an understanding of how stakeholders intend to achieve impact, to avoid endlessly measuring change but never improving understanding

The evaluation was commissioned as a formative evaluation in order to support learning and adaptation. This is especially appropriate where there is considerable uncertainty about what works and how the context is changing (in other words, both causal and empirical uncertainty). In our wider work, we have found that stakeholders want to understand very practical questions of how to get to their intended goals more quickly and more effectively. In the short run this reinforces the benefits of having a theory of change – even when it is understood that this theory of change will inevitably evolve during the lifetime of the initiative. The theory of change anchors learning in an understanding of how to achieve the intended change. It therefore helps set the scene from early on for a summative evaluation which will explore how far the intended goals were achieved. Without this we risk having projects with ‘eternal emergence’ where we just learn an endless sequence of new things with no accumulation of insight or knowledge. Added to this risk, unless we anchor the evaluation in what was intended, we lose sight of accountability. In the case of our evaluation of MAAS, we see the arc involving a developing review of progress. This is likely to culminate in designing a formal proposal for how to frame a summative evaluation that is firmly aimed at identifying results.

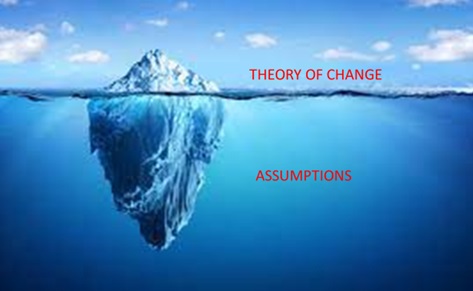

- Don’t be afraid of depth

Theories of change can easily become very superficial descriptions of a desired sequence of events. It may in some circumstances (as with MAAS) be possible to find agreement on what this looks like at the level of a theory of change. Achieving deep agreement, however, involves exploring the underlying assumptions that inform how these sequences (or the mini-causal pathways that collectively describe the initiative) are supposed to work. The theory of change can be visualised as the tip of the iceberg, visible above the water, while the underlying assumptions make up the much larger part of the whole but are less visible below the water line.

For example, the initiative makes the (not unreasonable) assumption that if innovators are provided with improved information, advice and guidance they will be motivated to increase the flow of better-informed solutions and that they will have the capacity to bring these to market. By focusing on this assumption, we can seek to understand how well the MAAS collaborators understand the needs, capacities and motivations of innovators in order to target their advice and guidance more effectively.

Concluding thoughts

I believe that the MAAS collaborators have been strategically wise in their approach to the evaluation. The evaluation team has been brought in early and with a specific aim to initiate a formative process evaluation that will lead to a design for a full summative evaluation. The evaluation team, the AI MAAS partners, and wider stakeholders have invested in co-developing a theory of change (through interviews and workshops) that helps to build agreement and to anchor learning. Through this work the importance of exploring ‘deep’ assumptions has been recognised. There are many factors which may affect how successful the whole initiative is, but the shared commitment to developing a theory of change while exposing and exploring deep assumptions is an important first step in the right direction.

There are important issues at stake. Evaluation has conventionally been focused on programmes or projects designed to achieve incremental improvements delivered through a small group of actors. The processes are largely linear. The AI MAAS, by contrast, has elements of this but also has significant elements of transformation where the aim is to achieve a step change in the way a system works, involving multiple actors in non-linear relationships, with a view to delivering a fundamental improvement in how information and advice are accessed and experienced. Theory of change-based evaluations which support learning, reduce important uncertainties, maintain a focus on achieving intended goals, and help align actors are (in my view) the way to go. However, knowing what needs to be done is not the same as successfully doing it.